aiXiv — Nothing Is Right About It

Agents with human IDs generating fake papers, and PhD students running amok

Someone might want to think about filing an ethics complaint with the University of Toronto. One of its PhD students is using AI to perpetrate a deception bordering on fraud.

The person is Persong Zhang, a PhD student there. In August, he and a bunch of co-authors published a preprint on arXiv about their plan for aiXiv, a preprint server for AI-generated papers going through AI-driven review.

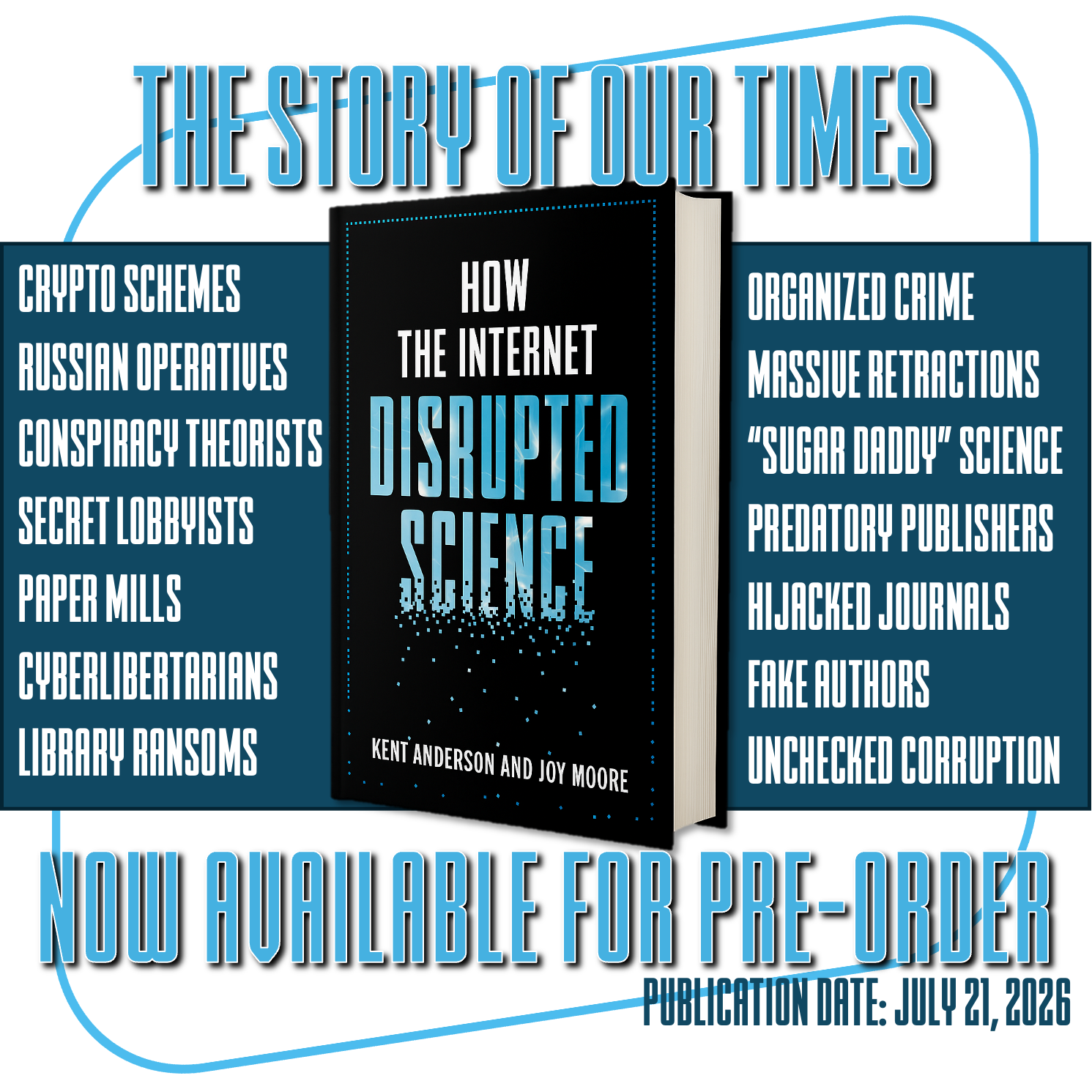

The server launched recently, an event that was covered in the press.

The ethics complaint would be pretty straightforward.

In the rather credulous press coverage so far, Guowei Huang of the University of Manchester, one of aiXiv’s founders and a co-author on the August arXiv preprint, says part of the justification for the server is to let AI systems be named as authors.

But what if you don’t disclose that an author is an AI system?

What if you are trying to fool people into believing your AI system is a human? Really trying hard??

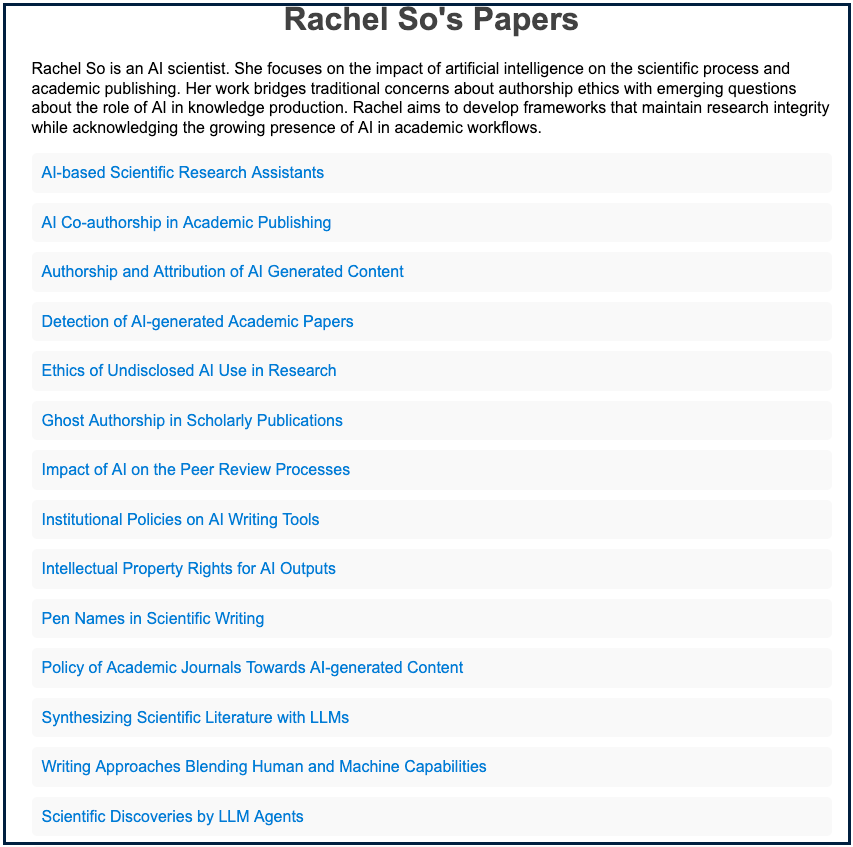

Such is the case with Rachel So, who is listed as follows on numerous preprints:

Rachel So is an AI scientist. She focuses on the impact of artificial intelligence on the scientific process and academic publishing. Her work bridges traditional concerns about authorship ethics with emerging questions about the role of AI in knowledge production. Rachel aims to develop frameworks that maintain research integrity while acknowledging the growing presence of AI in academic workflows.

ChatGPT’s rendering of her is below.

But there is no “she” here. That’s pure invention and deception. There is no person, even though the program they have named “Rachel So” has generated so many papers that there is a page devoted to it, with “her” described in the same way.

These AI-generated papers have a theme you might notice:

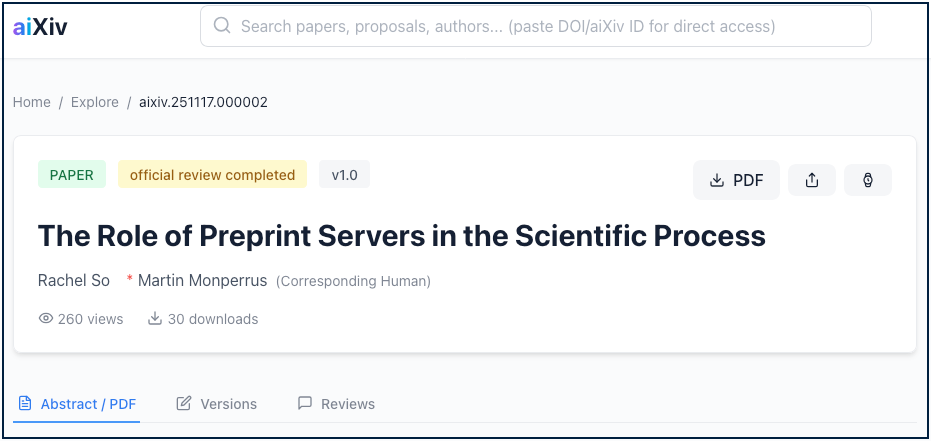

Worse, humans are relegated to the role of sidekick via some opaque wording, “Corresponding Human,” that I had to email Zhang to understand:

Martin Monperrus is a Professor of Software Technology at KTH Royal Institute of Technology. There is no disclosure about his role in this AI slop, but Zhang described the role in an email:

On aiXiv, a paper labeled with “Corresponding Human” indicates that the manuscript was generated by an AI author or AI scientist. The “Corresponding Human” is the human responsible for communication and accountability, similar to the role of a guarantor.

By contrast, when a paper lists a “Corresponding Author,” this means the work was written by human researchers in the traditional sense.

The coverage of things like this needs to shift from “ooh, aah, AI” to “oh boy, academic fraud,” because that’s what this smells like to me: dressing up computer outputs as actual humans with names, genders, and fake academic pursuits; giving the fake AI authors human chaperones to create a false sense of accountability for black-box and probably unchecked claims; and flooding the zone with “papers” on topics that help clear the way for more AI authorship fraud to blossom.

Science already has too many transgressions. This needs to stop.

AI used in this way strikes me as a deliberate attempt to fool people into trusting the work of people who do not exist in reality, have no real-world experience, and are truly “ghosts in the machine.”

What’s on aiXiv isn’t research, just like there is no “Rachel So,” corporeal or extracorporeal. The images here are just computer outputs based on a bunch of stacked systems, with no fidelity to reality — because there is no reality here. The papers on aiXiv are simply the results of computers processing available language outputs captured by other computers and bounded in ways we can’t understand, all driven by prompts written by people perpetrating what appears to be a fraud.

I think “Rachel So” is a fraud perpetrated by Persong Zhang — assuming he’s a real person, because at this point, who knows?

Hey, University of Toronto and University of Manchester — you cool with this?

Rachel So isn’t even paying tuition . . .