Are Platforms Fixing Things, Too?

Two platforms make strong moves in order to restore sanity and protect children

In one section of his excellent new book, “The Threat: How the FBI Protects America in the Age of Terror and Trump,” Andrew McCabe describes our upside-down information landscape in a particularly evocative way:

Americans have freer access to more information than at any other time in the history of our country. What happened when we were let loose on that landscape of possibility? People raised their voices, louder all the time, and the boundaries of the landscape we had known wore down as volumes rose. The country started seeming like a village in a folktale under a spell, where the more people see, the less they know.

We are under a spell, and we can’t seem to shake its effects.

A major source of the witchcraft has been the irresponsible way platforms like Facebook, YouTube, and others have been allowed to manage information. Regulations from the 1990s treat platforms as neutral conduits of information, sparing them the liabilities media companies typically face, so CEOs were able to build information services without fear of reprisals, lawsuits, or meaningful regulation. As a result, the past decade has been an information free-for-all during which these companies developed behavior modification systems to support targeted advertising and make billions, while nefarious actors learned how to manipulate these platforms for their own purposes.

Since 2016, when such information attacks led most notably to Brexit and Trump, these platforms have been forced to do some soul-searching.

Two actually found something, and are now trying to break the spell by placing limits on discoverability and access.

Pinterest’s recent announcement that it would no longer allow users to search for terms related to anti-vaccination messages is one of these moves. Anti-vaccination messages have now led to more measles outbreaks, as well as to young adults who know their parents were misinformed or misled and now seek vaccinations for themselves. Pinterest did the responsible thing and limited discoverability in order to choke off anti-vax messaging.

While imperfect (Pinterest’s fix didn’t work initially in some languages, and can’t work on some media), the move shows how a platform can behave responsibly toward the content it hosts and serves up.

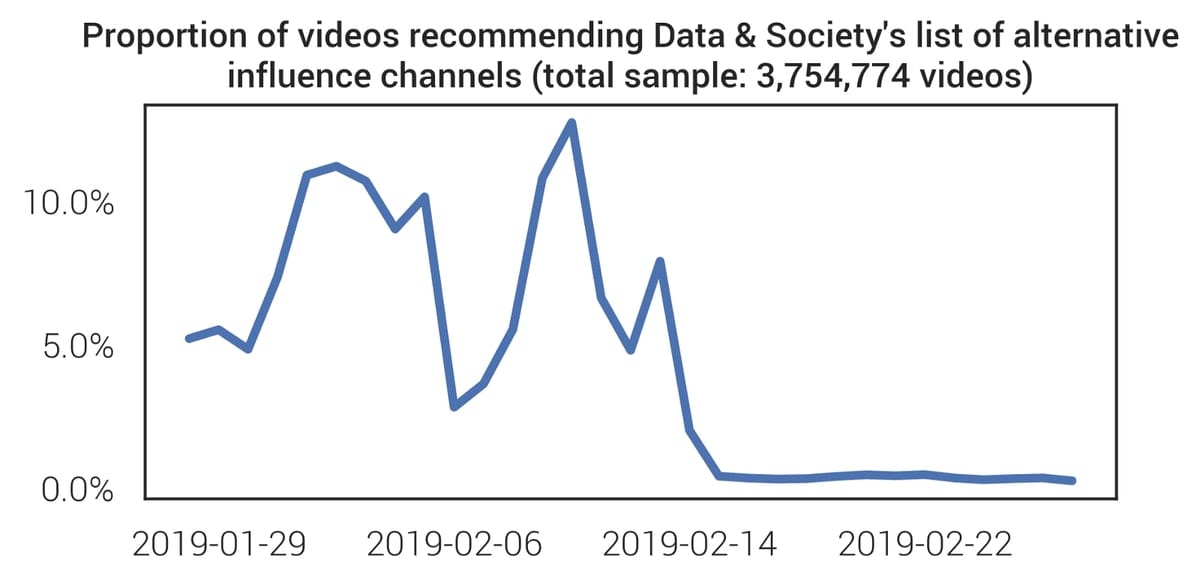

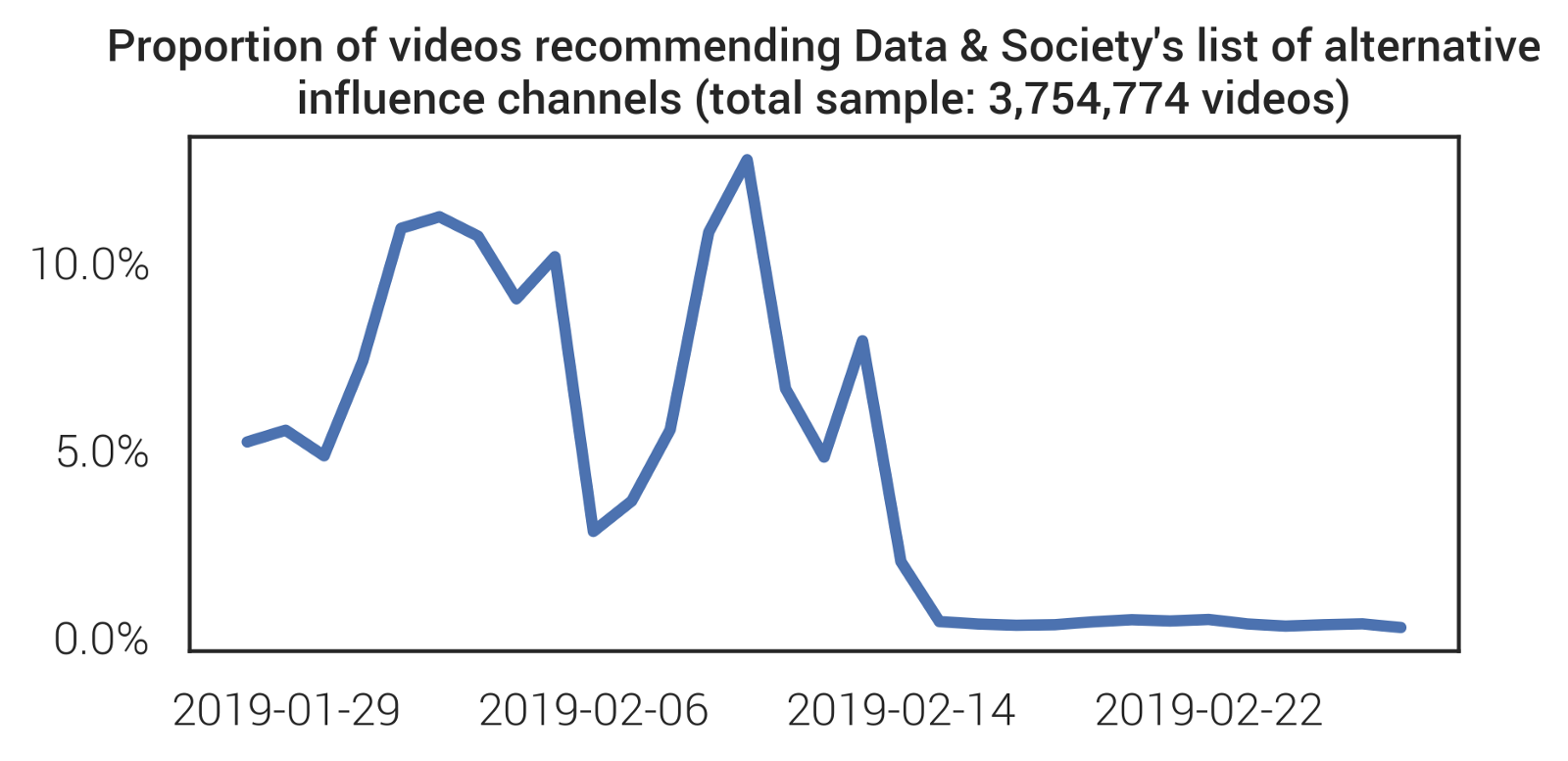

Pinterest is not alone. YouTube has suddenly all but stopped recommending alt-right videos. Nicolas Suzor and colleagues analyzed the recommendations attached to 3.7 million videos and tracked how often they pointed to alt-right content.

As Suzor writes:

Our preliminary results are stunning. For the first two weeks of February, YouTube was recommending videos from at least one of these major alt-right channels on more than one in every thirteen randomly selected videos (7.8%). From February 15th, this number has dropped to less than one in two hundred and fifty (0.4%).

YouTube also recently made another change: preventing comments on videos of children, in order to stop pedophiles from leaving inappropriate comments. It will take YouTube several months to disable comments on all videos featuring minors, but it turned off comments on tens of millions of videos last week alone.

Facebook, on the other hand, has notoriously avoided responsibility for what has occurred on its platform, from health misinformation to psychological influence to data intrusion.

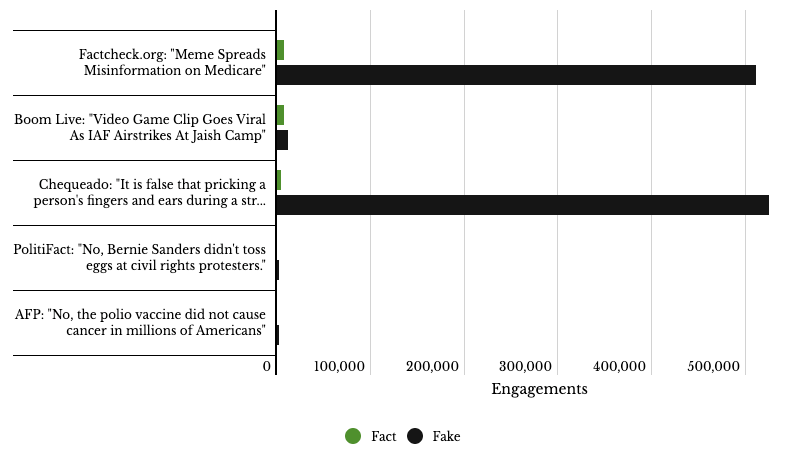

Facebook’s health misinformation is still rampant, with the reach of facts lagging far behind the reach of hoaxes, according to one recent analysis.

On Facebook, hoax claims spread far more widely and quickly than factual refutations, and the company has shown nearly zero willingness to change its ways.

These early moves by Pinterest and YouTube are promising. Consumer pressure seems to matter, and responsible technology leaders may be stepping forward. A question that may come up soon: since these platforms are demonstrating that they can manage their content, should they also be subject to the same liabilities as any other media company?

Let’s hope things keep moving in the right direction, and that platform providers continue to take responsibility for the information they manage.