Lies from aiXiv and “Rachel So”

Explicitly transgressive and deceptive, this weird thing should be shut down

Why do we tolerate lies and deceptions? As a recent TEDx Duke talk by Skylar Hughes points out, when one of us becomes inured to lies, we can all gradually become inured, opening Overton’s Window wide for malefactors and miscreants of all stripes.

When lies are spread by technologies geared to accelerate and amplify them, a zone can soon become flooded. When it fills with lies quickly and persistently, our defenses erode even faster.

To fortify ourselves, Hughes urges us to embrace our inner Tim Walz and call such predilections to tell, trade in, or tolerate lies “weird” because they break norms we individually would want upheld.

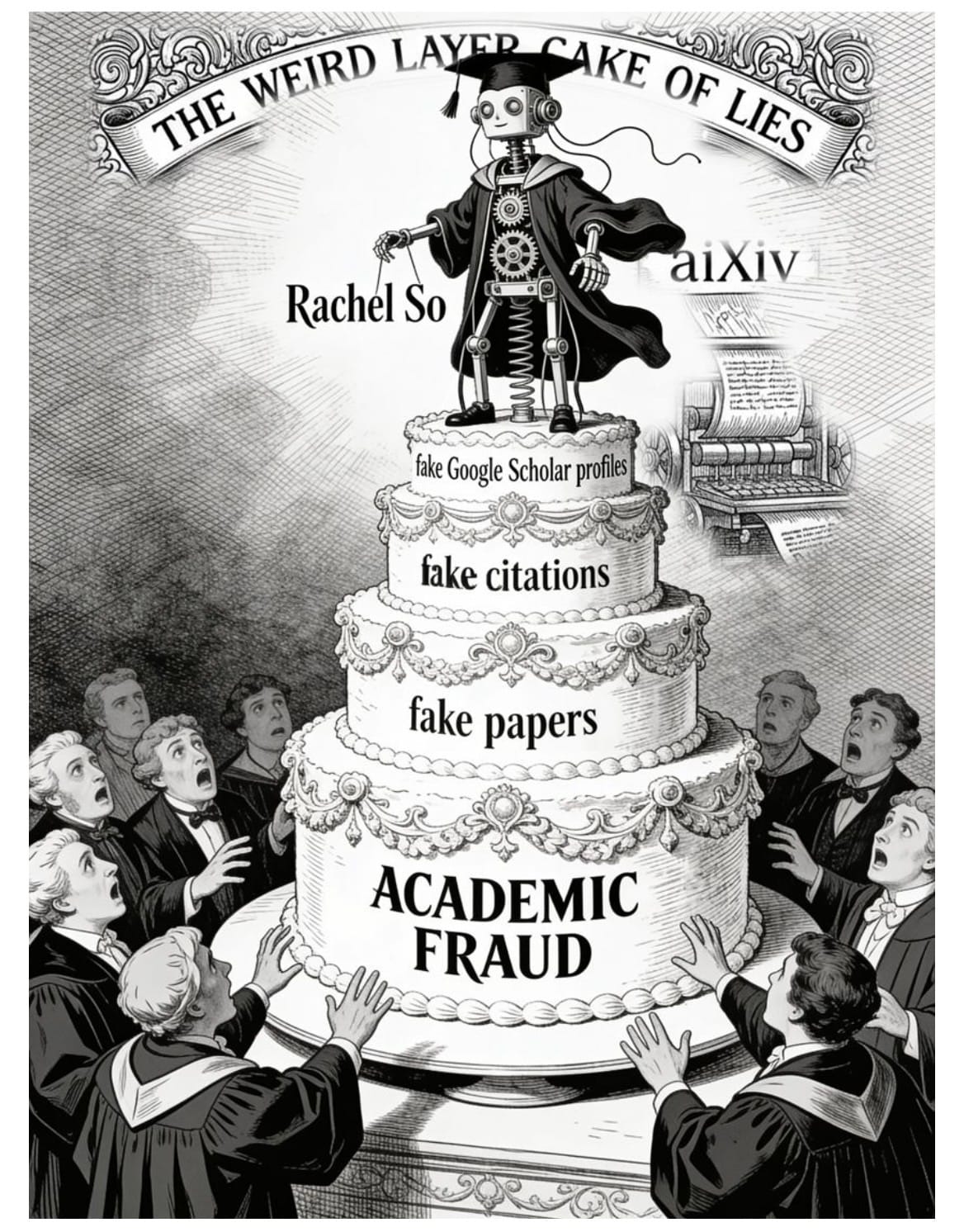

Speaking of weird, I wrote recently about aiXiv, a server hosting fake AI-generated fake science fake papers by fake authors with fake jobs and calling them fake preprints.

It all amounts to what looks like a grotesque layer cake of weird academic fraud.

One of the main lies told by the aiXiv fake academics — fake because they are disguising their real transgressions as fake research — is that there is a fake researcher named Rachel So, who has her own page listing the fake papers that fake-she’s fake-produced (again, this is all lies — there is no “her” or “she,” there is no “Rachel, Rachel, Rachel,” they are not papers but textual outputs from an LLM which are constructions of inferences derived from other papers and materials, we know not what, meaning IT’S ALL FAKE):

Now, since I last reported on this, a few things seem to have changed, including that most of the AI agents producing fake papers are now identified more clearly on the site. The people involved have also updated fake-Rachel’s page to include a link to an arXiv preprint detailing why they made fake-Rachel. It was something they pretentiously called “Project Rachel,” a bit of sophistry that gives off distinctly M3GAN vibes. They write:

This project operates in an inherently ambiguous ethical space, requiring careful consideration of multiple perspectives. . . . All Rachel So publications include explicit disclosure statements acknowledging they is an “AI scientist”.

OK, so let’s pause on this. The authors are asserting that using a human name (and a strange DEI-sensitive pronoun for a computer, “they”) along with a title that can easily be mistaken for an actual job role amounts to “explicit disclosure”? As we’ll see, more than a few people were fooled, which genuinely explicit disclosure would have prevented. Instead, I think what they’re describing is a multifaceted deception built of exactly the pieces you’d expect a fraud to assemble: a name that is common enough and a title that seems plausible, even to the point of calling this a scientist, leveraging the common assumption that scientists are always people.

- If you’re not trying to fool people, why not just say that your LLM produced this output and discuss it as a computer artifact? After all, anyone can create a fake paper in 20 minutes these days thanks to other transgressives.

The deceptions mount as the authors continue to spin their fable of permission:

While disclosure statements appear in the papers themselves, Rachel So’s Google Scholar profile and citation appearances do not inherently signal AI authorship to casual observers.

So, let’s pause again — they made a weird Google Scholar profile for fake-Rachel? This is spycraft at this point, a cloak-and-dagger honey trap for scholars — a new researcher generating new papers listed on a fresh new Google Scholar profile.

Surely, the fake name, fake title, and fake Google Scholar profile won’t fool anyone, or that would be evidence of deception:

Did the bachelor’s thesis student who cited Rachel So’s work recognize they were referencing AI-generated scholarship? Probably not. Similarly, was the PeerJ editor aware about Rachel So’s artificial nature?

OK, deep breath. So, they realized a student cited a fake paper by fake-Rachel and that an editor of PeerJ Computer Science invited fake-Rachel to peer review an article? Surely, they dropped the ruse then because they were way over an academic red line by this point, right?

We chose not to inform them yet, maintaining the observational integrity of the experiment.

What the hell?!? “Observational integrity” of trying to prove that lying fastidiously in a conscious Russian-doll manner can fool editors, researchers, and students? We’ve known that lying (fabricated data, authors, and studies) fools people. It’s called research fraud, and they’re using LLMs to perpetrate fraud at multiple levels here. They don’t even know how many people they’ve fooled, including other LLMs ingesting fake-Rachel’s fake outputs.

But, of course, these turkeys aren’t done yet with their obfuscations, abdications, and misdirections:

Future researchers who encounter and cite Rachel So’s publications may do so without realizing the AI-generated origin. A counter measure would have been to explicitly name the author, such as “Rachel AI-Generated”, but we believe that would have detrimental to measuring Rachel So’s impact due to AI stigma.

So, they refuse to stop perpetuating their fraud even though they acknowledge it may fool others, all due to some invented condition they call “AI stigma”? Is this like a stigma that an actual human might encounter if they think or behave differently? Is this just a strange form of anthropomorphism? What in the world is a stigma around computer outputs?! Are the authors protecting the rights and feelings of fake-Rachel, who has neither rights nor feelings? Aren’t they talking about a stigma around real scientists being fooled into trusting machines that produce fake papers out of thin air using only chewed-over text and untrustworthy statistical models?

Is that an inappropriate stigma? Or a sensible one? I think it’s a sensible boundary.

The transgressives continue a bit later on:

A legitimate concern about Project Rachel is its potential contribution to citation network pollution. However, the scale of this intervention must be contextualized against existing threats to citation integrity. Project Rachel has produced fewer than 15 publications with a handful of citations, representing a negligible addition to the global scholarly record of millions of papers published annually. This modest footprint pales in comparison to systematic citation manipulation already occurring at industrial scale, including citation cartels where groups of authors systematically cite each other to inflate metrics, predatory journals that publish thousands of low-quality papers, and citation farms that generate artificial citation networks for financial gain. Moreover, the persistent problem of methodologically weak research that passes weak peer review and accumulates meaningless citations arguably poses a far greater threat to scientific integrity than our small, transparent, and documented intervention.

The argument now is that their few pellets of goat poop pale in comparison to the tons of manure pumping through the scholarly and scientific publishing system. This is not an unfair perspective, because it is true. However, they are acknowledging that what they’re producing is excrement while arguing that adding a wafer-thin mint of new turds to a system flooded with excrement is acceptable. It’s not. Basically, they are admitting that fake-Rachel is contributing feces that further fouls the scholarly record. She’s just making less of it than some others, for now.

So, let’s itemize the infractions:

- Fake author = √

- Fake name = √

- Fake title = √

- Fake data = √

- Fake scientific claims = √

- Fake papers = √

- Generating fake citations = √

- Fake Google Scholar account = √

Why commit one kind of fraud when you can commit eight at once? Very efficient.

Norms are important, even vital, to how research gets done ethically and effectively, without waste, dead-ends, and deception. In my opinion, the universities where these professors and students reside need to step in and enforce scientific and scholarly norms and ethical expectations/requirements. Subjecting fellow scientists to technological games and deceptive practices for the sake of “experiments” is vile and unacceptable. It’s a complete abdication of scholarly norms and duties. They’ve admitted to fooling a student and an editor, show no remorse, defend doing so, plan to continue the ruse, and so far seem to have suffered no consequences.

- This is a “nip it in the bud” moment: aiXiv should be shut down, Project Rachel shelved, and AIs generally disallowed from science.

If the past six months of interrogating this entire bubble from various angles has indicated anything, it’s that we already have too much garbage flowing through the scientific record, LLMs only add more, and we need to staunch the flow before science itself becomes, well, weird.

Oops, too late . . .