Altmetric Devalues Twitter, Tells Nobody

Altmetric slashes its biggest source's contribution, neglects to tell even its own staff

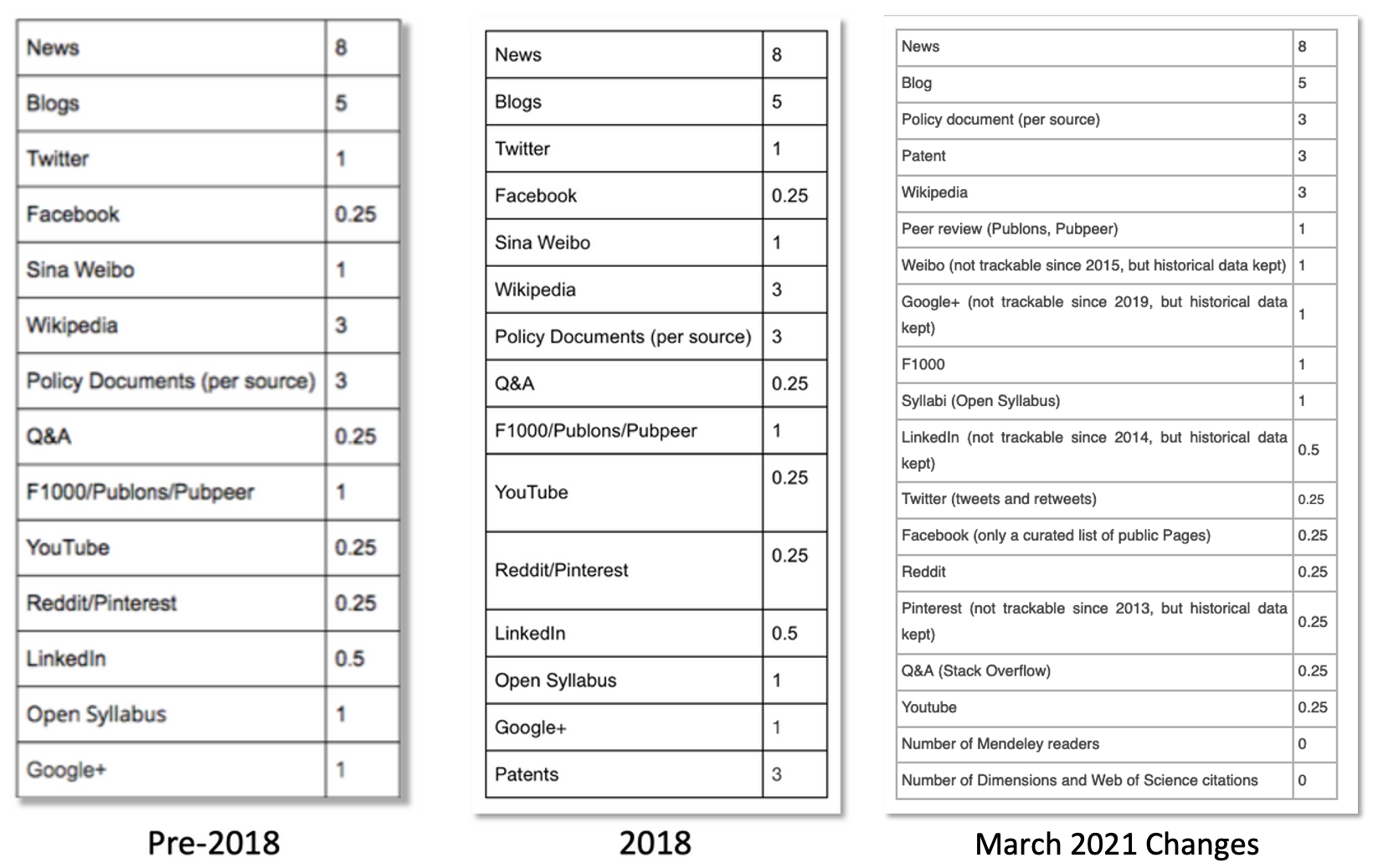

Altmetric has a long history of questionable scoring approaches, starting with a weighting system that was 86.9% dependent on Twitter activity. This gave Twitter inordinate influence over Altmetric scores.

There have also been lingering questions about the timeliness or existence of data from notoriously stingy sources like LinkedIn.

Yet, Altmetric boldly claimed to know the “Top 100 Articles” each year, and purveyed its visually questionable flower/donut without appearing concerned about data sourcing, weighting, or presentation issues apparent to anyone who looked under the hood.

Last week, I discovered by accident that in March 2021, without any announcement, Altmetric made a significant change to its weighting system, cutting the weight given to Twitter by 75%. This major haircut of its most important source came in the midst of other changes, detailed below.

To confirm the changes weren’t announced, I contacted Altmetric and learned that its own Customer Support Manager was unaware of them. I also contacted some well-informed people in scholarly publishing, and none of them had heard about the scoring modifications.

Over the years, because Altmetric doesn’t archive its pages, I’ve learned to use screengrabs to document the evolving Altmetric system. Please forgive any fuzziness in the images below, which compare the pre-2018, 2018–2021, and current weighting tables:

As noted, before this change to the weighting (and possibly the ingestion practices) around Twitter data, Twitter accounted for 86.9% of an Altmetric score on average. That was clearly a poor early weighting decision that needed to be changed.

But maybe they could have told us?

I stumbled on this change when a list of the “Most Influential Research Square Preprints of All Time” was floated past me on social media, a headline that strikes these ears as presumptuous in at least two ways (agency and plausibility).

Preprints with high Altmetric scores are described by Research Square as the “[m]ost widely circulated preprints,” another dubious assertion, as social sharing’s effect on reading or distribution is mostly unknown, while hidden sharing and meaningless virtue signaling go on all the time. But I digress . . .

Starting from these lists, I tried to reproduce the scores with each of the weighting systems Altmetric has presented over the years. I could not, which hints at what may be going on here.

Now a Before/After Scoring System?

Not surprisingly, given the way the changes were introduced, the scores I found can’t be reproduced with the new scoring system alone. I tried three Research Square preprints from their lists (here, here, and here), and none of their scores reproduced.

Here are the Altmetric data I saw on one:

After entering the counts shown under the donut into the current scoring system, I could only come up with a score of 1381, not 3504. Using the 2018 system overshot the displayed score by a wide margin.
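For readers who want to try this themselves, the naive form of “entering the details into the scoring system” is a weighted sum: each source’s mention count multiplied by that source’s weight. The Python sketch below is illustrative only. The weights and mention counts are assumptions, not Altmetric’s data; the Twitter weight of 1.0 before and 0.25 after is an assumption chosen to mirror the 75% cut; and the sketch ignores any adjustments Altmetric may apply beyond its published table.

```python
# Naive reconstruction of a weighted attention score: sum(count * weight).
# All weights and counts below are illustrative assumptions, not Altmetric's data.

OLD_WEIGHTS = {"news": 8.0, "blog": 5.0, "twitter": 1.0, "facebook": 0.25, "mendeley": 0.0}
# Assumed: only the Twitter weight changes, cut by 75% (1.0 -> 0.25).
NEW_WEIGHTS = {**OLD_WEIGHTS, "twitter": 0.25}


def attention_score(counts: dict[str, int], weights: dict[str, float]) -> float:
    """Sum each source's mention count times its weight; unlisted sources count as zero."""
    return sum(n * weights.get(source, 0.0) for source, n in counts.items())


def source_share(counts: dict[str, int], weights: dict[str, float], source: str) -> float:
    """Fraction of the total score contributed by a single source."""
    total = attention_score(counts, weights)
    if total == 0:
        return 0.0
    return counts.get(source, 0) * weights.get(source, 0.0) / total


# Hypothetical mention counts, as if copied from under a donut.
counts = {"news": 10, "blog": 4, "twitter": 1000, "facebook": 8, "mendeley": 500}

print(attention_score(counts, OLD_WEIGHTS))                # 1102.0
print(attention_score(counts, NEW_WEIGHTS))                # 352.0
print(f"{source_share(counts, OLD_WEIGHTS, 'twitter'):.0%}")  # 91%
print(f"{source_share(counts, NEW_WEIGHTS, 'twitter'):.0%}")  # 71%
```

In this made-up example, quartering the Twitter weight cuts the total score by more than two-thirds, and the 500 zero-weighted Mendeley readers contribute nothing under either table.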

My best guess is that because the preprint in question was posted in June 2020 and Altmetric revised the scoring in March 2021, we are seeing both weighting systems at work, with the pre-2021 version giving way to the current version at some uncertain point. Running the article through the 2018 system generates a score of 4843, making 3504 about 72% of what the 2018 score would have been. It seems plausible that a mid-flight change in weighting could shave 28% off an overall score, especially with the largest and earliest historical source, Twitter, trimmed the most. (FYI, Twitter would have accounted for 95.3% of the preprint’s 2018 score if the weightings had been left unchanged.)
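The figures in the previous paragraph can be checked with a few lines of arithmetic. The Python sketch below rests on hypothetical assumptions: that the score is a plain weighted sum, that the rounded 95.3% Twitter share is exact, that only the Twitter weight changed (from 1.0 to 0.25), and that a single cutover date separates old-weighted from new-weighted Twitter attention. The cutover model is a stand-in for the mixed-scoring guess, not a description of how Altmetric actually works.

```python
# Back-of-envelope check of the figures above. Assumes (hypothetically) that the
# score is a plain weighted sum and that only the Twitter weight changed, 1.0 -> 0.25.

DISPLAYED = 3504       # score shown on the preprint
OLD_SCORE = 4843       # same mentions run through the 2018 weights
TWITTER_SHARE = 0.953  # Twitter's share of the 2018 score (rounded in the text)
TWITTER_CUT = 0.25     # assumed ratio of new to old Twitter weight

twitter_old = OLD_SCORE * TWITTER_SHARE    # Twitter points under the 2018 weights
other = OLD_SCORE - twitter_old            # all non-Twitter points (assumed unchanged)
twitter_new = twitter_old * TWITTER_CUT    # Twitter points under the new weights
new_score = other + twitter_new            # everything rescored with the new weights

# Hypothetical cutover model: Twitter attention before the cutover keeps the old
# weight, later attention gets the new one. What old-weighted fraction yields 3504?
old_fraction = (DISPLAYED - new_score) / (twitter_old - twitter_new)

print(f"ratio to 2018 score: {DISPLAYED / OLD_SCORE:.1%}")           # 72.4%
print(f"all-new-weights score: {new_score:.0f}")                      # 1381
print(f"implied old-weighted share of Twitter: {old_fraction:.0%}")  # 61%
```

Under these assumptions the numbers hang together: rescoring everything with the new weights lands on the same 1381 reached above, and the displayed 3504 would correspond to roughly three-fifths of the Twitter attention still being scored at the old weight. That is consistent with a before/after scoring system, but it does not prove one.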

If so, Altmetric scores for “bridge” articles (those published before the change and still attracting attention after it, or older articles that get a second life for some reason) would blend the two weighting systems. Yet nothing I could find from Altmetric explains how such articles are scored. The lack of transparency starts with the unannounced change in scoring back in March and runs through Altmetric’s online resources.

This was a foreseeable problem, and I wrote about it in 2018:

> The Altmetric weighting system has no versioning, and the implications of changes aren’t clear. There has been at least one revision to the Altmetric weighting system in the past two years, yet this is not reflected in any obvious manner, either with a versioning history or a statement of revision to scoring.

Fewer and Different Active Data Sources

The new approach also acknowledges some of Altmetric’s suspected data limitations. In its new table of weightings, Altmetric clarifies the following:

- Sina Weibo (now just Weibo) has not been trackable since 2015

- Google+ has not been trackable since 2019 (when it was shut down)

- LinkedIn has not been trackable since 2014

- Pinterest has not been trackable since 2013

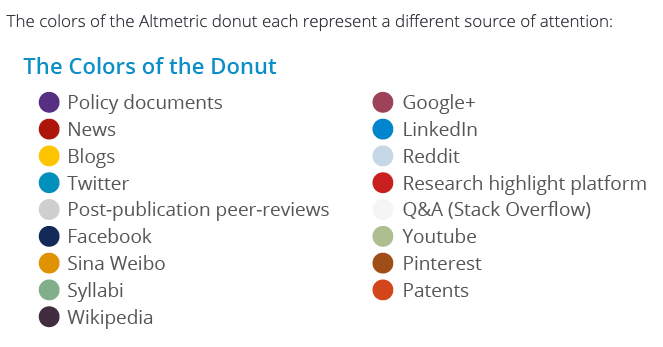

Despite these limitations and dead sources, lists on higher-level and more eye-catching pages make it look like every source is active and current, with no caveats or footnotes:

This is misleading. LinkedIn, Pinterest, Sina Weibo, and Google+ should be crossed off, or marked in some way, to avoid giving the impression that Altmetric is getting current data from these sources.

In the new scheme, Altmetric is clearer about what it tracks from Twitter and Facebook: only tweets and retweets from the former, and “a curated list of public Pages” from the latter. Unpacking that phrase leads me to wonder who curated the list, how long it is, and what criteria went into creating it. I’d love to see it. Wouldn’t you?

Other changes increase the granularity of the data sources in ways that make you wonder if they’re strategic:

- Separating “F1000” from the category it now calls “Peer review,” which includes Publons and PubPeer. This is an interesting distinction to make, and one that may be controversial with F1000 fans. The scoring remains equivalent, however. (Is the granularity a competitive move by Digital Science, Altmetric’s parent company, now that T&F owns F1000? Also, F1000Prime is now called “Faculty Opinions,” and highlights on Faculty Opinions are counted as F1000 data, even though Faculty Opinions is not listed as a distinct source.)

- Reddit is separated from Pinterest, likely because Altmetric now concedes that Pinterest data haven’t been available since 2013, while Reddit data appear to be actively updated.

- Clarification that “Q&A” refers to Stack Overflow.

- Adding Mendeley readers and Dimensions/WoS citations with a weighting of zero (0) is curious, and suggests Altmetric wants to track these data without surfacing them to the public. Yet at least two of them do appear in displays, while counting as zero in the scoring. I wonder why . . .

Time to Move “Beyond the Donut”?

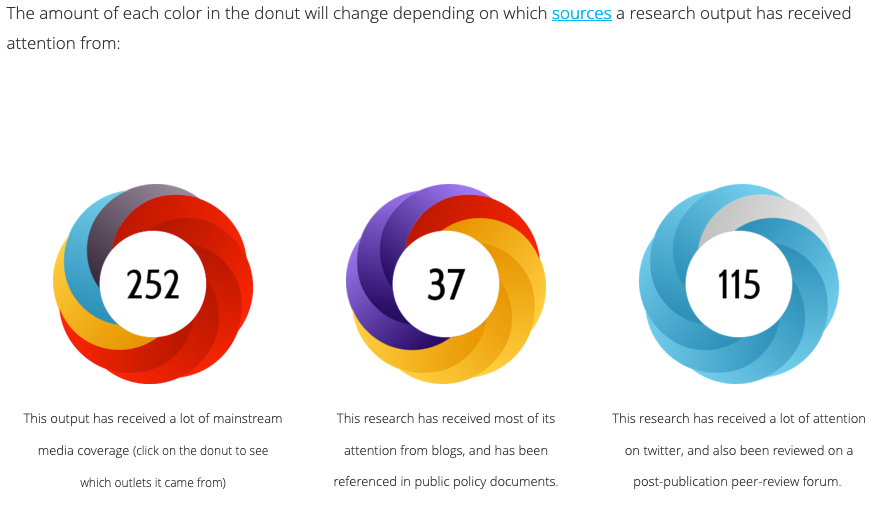

Altmetric also appears to have responded to criticism of its flower/donut by redesigning it and adding an interpretive graphic that tells readers not to take the donut too literally, but to treat it more as a guide to weighting outcomes:

To me, that raises the question of whether a multi-petal or multi-segmented presentation even makes sense. The whole package is a bit dodgy, and a simple bar chart placed near the coverage listings would be cleaner.

In 2018, Altmetric’s founder and first CEO — Euan Adie — left the company. Since then, Altmetric looks like a company slowly waking from a spell and sleepily fixing what had been broken. But is it fixing the right things fast enough and in a transparent manner? So far, the pace and communication appear to be sluggish.

Altmetric has strong technology, and the list of coverage is itself fascinating and useful. Why the company continues to push graphical and numerical distillations puzzles me, but with “metric” built into the name, it may be hard to pivot away. Not that it hasn’t been considered. In 2019, Altmetric itself contemplated dropping the numerical aspect of the score. This would be a good move. The service has a strong value proposition without it. The idea of altmetrics hasn’t aged well, and the prefix “alt-” is feeling like more and more of a liability. It may be time for the company to rebrand and create some space to reinvent itself.

An artificial, questionable, and troublesome scoring system doesn’t serve Altmetric or its users well. Dropping the metric aspect would mean fewer headaches, more substance, and more legitimacy long-term.

And announcing when you make a significant change to the underlying scoring system would increase trust.