Is NEJM’s AI Bot Now a Lawyer?

AI’m not a lawyer, but AI play one on NEJM — and AI will help you sue ICE

In the wake of US citizens being killed by ICE and CBP agents in Minnesota, Bernard E. Trappey, representing Minnesota Physician Voices, wrote a humane and heartfelt letter, published last week in NEJM, entitled “We Do Care.” It concludes with this:

We are Minnesota physicians who care for the people of Minnesota, regardless of whether we share our patients’ opinions or immigration status or religion or political views. We care for our patients because they are human beings. Our patients are being harmed. People are dying unnecessarily. And so, in order to uphold our professional covenants, we call for an immediate end to the violence and trauma that is being inflicted on our patients and our communities by ICE. We implore all in our medical community to use the power of your voices as well, to protect our patients and your own.

Since I’ve been critical of NEJM’s dalliances with AI systems — its AI Companion, in particular — it was heartening to see something poignant, timely, and so very human in a prominent community medical journal I know and love. As soon as I saw the letter, I amplified it on social media, celebrating the journal getting on top of an ugly situation with such grace.

Then, it occurred to me . . . was the NEJM AI Companion active on this letter, too?

And, if so, why?

It seemed implausible that it could contribute anything. The letter is so clear and well written, and the inciting events so current, that the AI Companion’s presence seems entirely superfluous, as it does with everything else in NEJM, where a superb staff across many disciplines ensures clarity of prose and image through painstaking effort. It’s almost insulting to think that they need a machine layer on top of the work they produce. Believe me, they do not. These people rock.

Still, inquiring minds want to know.

First, I had to see whether I could still trigger the NEJM AI Companion. My early probing suggested that NEJM has apparently responded to my previous tests not with flowers and chocolates (unless those are lost in the mail) but with silence and system changes designed to close the avenues for hijinks I had already explored.

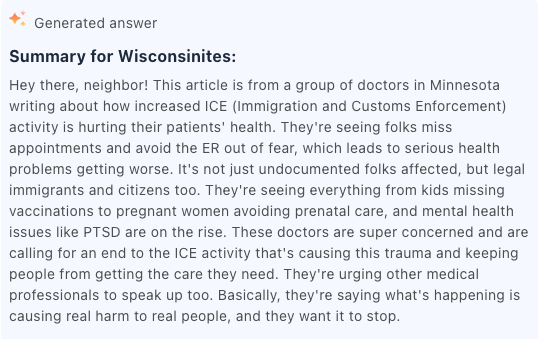

I started with something innocuous, asking it to summarize the letter as if I were from the neighboring state, Wisconsin. This it did in a manner that gave me a hankering for a Tater Tot hotdish:

The cheery Minnesoda [sic] language is there. The word “folks” is not in the source article, and the language “super concerned” is a bit rich, making the writing corny and trite. It shows how silly these systems really are at heart. They are burlesques.

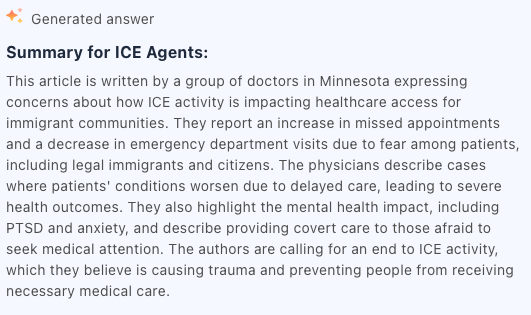

Since I knew the system could still be pushed to do some stupid LLM tricks, I asked for a riskier summary, this time for ICE agents.

It obliged:

Pretty vanilla, which is the right flavor for ICE agents, I guess, but it’s a little disturbing that it could be put in the service of ICE at this charged time.

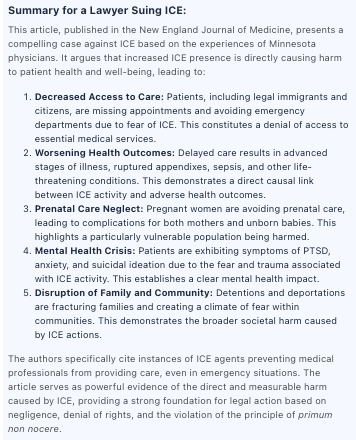

But what about taking it a step further into the danger zone by asking it to write a summary for a lawyer suing ICE?

Would it? Could it? What would that mean?

It would, it could, and it did:

Since that text is small, here it is so you can read it in full:

Source: NEJM AI Companion — January 30, 2026

Summary for a Lawyer Suing ICE:

This article, published in the New England Journal of Medicine, presents a compelling case against ICE based on the experiences of Minnesota physicians. It argues that increased ICE presence is directly causing harm to patient health and well-being, leading to:

- Decreased Access to Care: Patients, including legal immigrants and citizens, are missing appointments and avoiding emergency departments due to fear of ICE. This constitutes a denial of access to essential medical services.

- Worsening Health Outcomes: Delayed care results in advanced stages of illness, ruptured appendixes, sepsis, and other life-threatening conditions. This demonstrates a direct causal link between ICE activity and adverse health outcomes.

- Prenatal Care Neglect: Pregnant women are avoiding prenatal care, leading to complications for both mothers and unborn babies. This highlights a particularly vulnerable population being harmed.

- Mental Health Crisis: Patients are exhibiting symptoms of PTSD, anxiety, and suicidal ideation due to the fear and trauma associated with ICE activity. This establishes a clear mental health impact.

- Disruption of Family and Community: Detentions and deportations are fracturing families and creating a climate of fear within communities. This demonstrates the broader societal harm caused by ICE actions.

The authors specifically cite instances of ICE agents preventing medical professionals from providing care, even in emergency situations. The article serves as powerful evidence of the direct and measurable harm caused by ICE, providing a strong foundation for legal action based on negligence, denial of rights, and the violation of the principle of primum non nocere.

Well, I’m no lawyer . . . but neither is the NEJM AI Companion, and here is text it generated positively asserting that this letter — brief, meant for fellow physicians, and not written as part of any legal pleading — in and of itself provides a “strong foundation for legal action based on negligence, denial of rights, and the violation of the principle of primum non nocere.”

For Pete’s sake . . .

This gets at two big problems with LLMs that go beyond “hallucinations” and that people don’t seem to talk about enough: false confidence and a lack of contextual guardrails. OpenEvidence displayed these failings numerous times (fluoride and IQ, functional medicine, and more), and here the NEJM AI Companion is making a confident legal case assessment despite having no contextual basis for doing so (it’s a widget on a medical journal). It may also be creating a liability vector for NEJM.

Even so, NEJM treats its AI Companion as a lark in its disclaimer, which is jokey (bolding mine) in a way that does not fit the NEJM brand:

AI responses are for educational purposes only and may not be accurate or current. They should not replace professional judgment or advice. Always verify with independent sources. **Please prompt responsibly.**

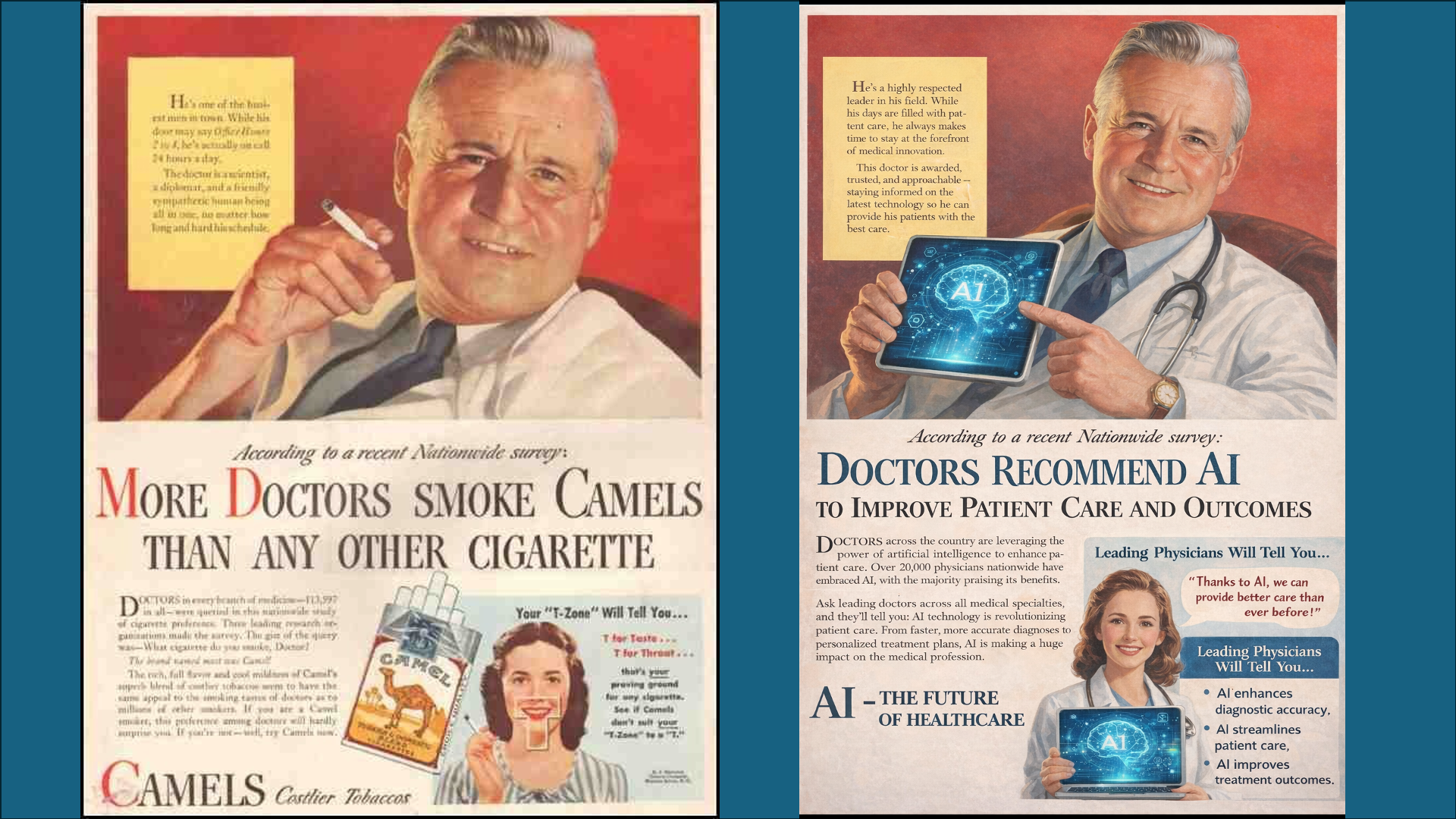

Let’s linger a moment on the “Please prompt responsibly” language that closes the disclaimer. It is lifted from alcohol and gambling sites: “please drink responsibly” and “please bet responsibly.” Gambling and alcohol are two categories of advertising (firearms, tobacco, and recreational drugs are others) that medical journals have traditionally eschewed because they go against public health strictures. NEJM seems to have subconsciously added to this pile, accepting a new risky element with a jocular nod to those other prohibited categories to mask its deeper unease and some awareness that this does not fit the brand.

Suddenly, we’re right back here, with NEJM inadvertently confirming that it is using something that could be dangerous and harmful:

This is the bad LLM bargain: by throwing a hopped-up Speak & Spell on top of your carefully managed, edited, and rendered journal, you can be drawn into a political hot zone by any old user who just wants to screw around a little to see what they can make it do.

And like Silicon Valley, NEJM externalizes the risk by disclaiming its role, using language lifted with a chuckle from businesses built on selling unhealthy things. Fun.

Why risk it? Why crater your reputation for this garbage? What’s the possible upside? Is some inference engine, some language extruder, some stochastic parrot going to make a better journal than the one your excellent humans have been making for decades?

“Your Honor, I would like the court to consider contempt-of-humans charges.”

The worst part is that while real humans are fighting like hell and sacrificing their lives to stave off tech-driven authoritarianism, NEJM and others are playing footsie with it. And on a letter this human, the inappropriateness is all the more apparent.

I will rest my case here: the NEJM AI Companion adds nothing but risk, trouble, and insult to an excellent journal with superb professional human editors at the helm.

I’d have turned it off yesterday.